Token limits define how much text a Gemini model can read and write in a single request, and so set the practical scope of what it can process. This article looks at the limits of Google’s Gemini Pro and Gemini Advanced, along with the much larger limit introduced with Gemini 1.5 Pro.

Gemini Pro has an input token limit of 30,720 and an output token limit of 2,048, with a rate limit of 60 requests per minute. On the other hand, Gemini 1.5 Pro can process up to 1 million tokens in production, allowing it to handle vast amounts of information in one go.

In this article, we look at Gemini AI token limits, why they matter, and what they mean in practice. By moving well past earlier token limits, Google Gemini makes it possible to work with far larger inputs in a single request than before.

What are Gemini Token Limits?

Gemini AI models operate within predefined token limits, which dictate the volume of input and output tokens they can process. These limits vary across different versions of Gemini AI, tailored to meet specific use cases and performance requirements.

Notably, Gemini Pro and Gemini Advanced stand out as flagship offerings, each with distinct token limits and capabilities.

Gemini Pro Token Limits: Redefining Boundaries

Gemini Pro is built for complex language tasks. It has an input token limit of 30,720 and an output token limit of 2,048. This capacity lets Gemini Pro analyze long textual inputs and generate nuanced, contextually rich outputs, as long as the prompt and the response each stay within their respective limits.
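The Gemini Pro limits quoted here can be turned into a simple pre-flight check before a prompt is sent. The following is a minimal sketch, not part of any Google SDK: `estimate_tokens` and `fits_input_limit` are hypothetical helpers, and the four-characters-per-token ratio is only a rough assumption for English text. A real application would rely on the model's own token counter instead.

```python
# Limits quoted in this article for Gemini Pro.
GEMINI_PRO_INPUT_LIMIT = 30_720
GEMINI_PRO_OUTPUT_LIMIT = 2_048

# Assumption: roughly 4 characters per token. This is a heuristic only.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Hypothetical helper: approximate the token count from character length."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_input_limit(prompt: str, limit: int = GEMINI_PRO_INPUT_LIMIT) -> bool:
    """Return True if the estimated prompt size is within the input limit."""
    return estimate_tokens(prompt) <= limit
```

Because the estimate is approximate, it is safer to leave some headroom below the limit rather than fill it exactly.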

Beyond token limits, Gemini Pro also operates within a rate limit of 60 requests per minute, ensuring efficient utilization of computational resources while maintaining optimal performance. This combination of token limits and rate restrictions establishes Gemini Pro as a versatile and dependable solution for diverse AI-driven applications.
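A client can stay under a limit of 60 requests per minute by tracking its own recent requests. The sketch below uses a sliding-window counter, one common approach among several. The class name `SlidingWindowLimiter` and its parameters are illustrative assumptions, not part of any Gemini client library, and the server remains the final authority on whether a request is accepted.

```python
import time
from collections import deque


class SlidingWindowLimiter:
    """Client-side limiter: at most max_requests per window_seconds."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock  # injectable so the limiter can be tested without sleeping
        self._sent = deque()  # timestamps of requests still inside the window

    def try_acquire(self) -> bool:
        """Return True and record the request if one is allowed right now."""
        now = self.clock()
        # Drop timestamps that have aged out of the window.
        while self._sent and now - self._sent[0] >= self.window_seconds:
            self._sent.popleft()
        if len(self._sent) < self.max_requests:
            self._sent.append(now)
            return True
        return False
```

A caller would check `try_acquire()` before each request and wait or queue the request when it returns `False`.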

Gemini Advanced Token Limits: Pushing the Boundaries Further

Gemini Advanced goes further, with higher token limits and extended processing capabilities. Unlike Gemini Pro, Gemini Advanced can process up to 1 million tokens in production, a substantial jump in how much text the model can take in at once.

This capability greatly expands the amount of information Gemini Advanced can handle in a single pass, making it well suited to applications that require large-scale data processing and analysis, such as working through long documents or generating coherent responses across diverse domains.

Unlocking the Potential of Gemini AI Token Limits

Token limits are more than numbers on a spec sheet. They determine how much context a model can consider at once, and therefore which tasks it can handle in a single request. By raising these limits, Gemini AI lets users apply NLP technology to inputs that previously had to be split up or shortened.

Breaking Token Limits: Creating a New Future

The recent unveiling of Gemini 1.5 Pro marks a significant milestone in the evolution of Gemini AI, signaling a paradigm shift in token limits and processing capabilities. With the ability to process up to 1 million tokens in production, Gemini 1.5 Pro transcends previous constraints, opening doors to new possibilities in AI-driven communication.

This leap not only increases the amount of information the model can process at once but also underscores Google’s commitment to pushing the boundaries of AI innovation. As Gemini AI continues to evolve in response to changing user needs, there will be further opportunities to apply its capabilities across diverse domains.

How Does Google Gemini’s Token Limit Compare to Other AI Models?

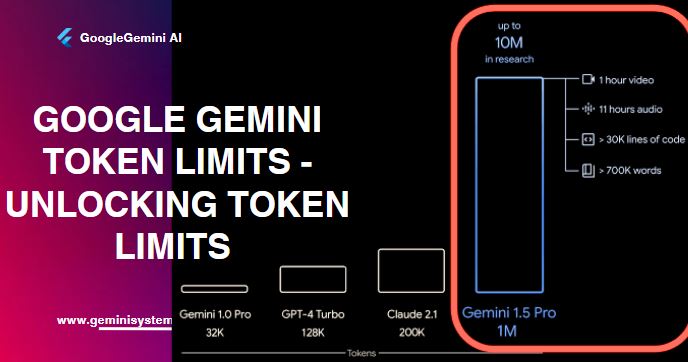

Gemini 1.5 Pro has a token limit of up to 1 million tokens in production, far surpassing GPT-4, whose extended-context version allows about 32,000 tokens and whose standard API version allows about 8,000. By comparison, ChatGPT 3.5 typically permits 4,097 tokens, and ChatGPT 4 in the chat interface offers a limit of about 4,000 tokens. Token limits vary among AI models and their versions, and they determine how much text can be processed in one request.
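To put these figures side by side, the short sketch below computes how many times larger one limit is than the others. The numbers are the ones quoted in this article and are not independently verified here. The dictionary labels and the `ratio_to` helper are illustrative names only.

```python
# Token limits as quoted in this article (not independently verified).
LIMITS = {
    "Gemini 1.5 Pro": 1_000_000,
    "GPT-4": 32_000,
    "Gemini Pro (input)": 30_720,
    "GPT-4 API": 8_000,
}


def ratio_to(baseline: str, limits: dict = LIMITS) -> dict:
    """Return how many times larger the baseline's limit is than each other limit."""
    base = limits[baseline]
    return {name: base / value for name, value in limits.items() if name != baseline}


for name, ratio in ratio_to("Gemini 1.5 Pro").items():
    print(f"Gemini 1.5 Pro holds about {ratio:.1f}x the tokens of {name}")
```

With these figures, 1 million tokens is 31.25 times the 32,000-token limit and 125 times the 8,000-token limit.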

FAQs

Here are some frequently asked questions (FAQs) regarding Gemini AI token limits:

What are token limits in Gemini AI?

Token limits in Gemini AI refer to the maximum number of input and output tokens that the model can process in a single request. These limits vary depending on the specific version of Gemini AI, such as Gemini Pro or Gemini Advanced.

Why are token limits important in Gemini AI?

Token limits are crucial as they determine the model’s capacity to handle textual data effectively. By defining the maximum number of tokens that can be processed, these limits ensure optimal performance and resource utilization, preventing overload and degradation of output quality.

What is the token limit for Gemini Pro?

Gemini Pro has an input token limit of 30,720 and an output token limit of 2,048. Additionally, it operates within a rate limit of 60 requests per minute, ensuring efficient processing and response times.

How does Gemini Advanced differ from Gemini Pro in terms of token limits?

Gemini Advanced surpasses Gemini Pro in token limits, with the ability to process up to 1 million tokens in production. This substantial increase in processing capacity enables Gemini Advanced to handle larger volumes of textual data with greater efficiency and scalability.

Can the token limits of Gemini AI be adjusted or customized?

The token limits of Gemini AI are predefined based on the specific version and use case. While users may not directly adjust these limits, Google continually refines and updates Gemini AI to optimize performance and accommodate evolving user needs.

What happens if the token limits of Gemini AI are exceeded?

Exceeding the token limits of Gemini AI may result in processing errors or degraded results: a request that exceeds the input limit may be rejected, and a response that reaches the output limit may be cut off. To avoid this, keep requests within the prescribed token limits, or consider upgrading to a higher-tier version, such as Gemini Advanced, for handling larger datasets.
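One common way to stay within an input limit is to split a long text into smaller pieces and send them separately. The sketch below is one possible approach, not an official method: `split_into_chunks` is a hypothetical helper, and it sizes chunks using an assumed ratio of about four characters per token, which is only a rough heuristic.

```python
def split_into_chunks(text: str, max_tokens: int = 30_720,
                      chars_per_token: int = 4) -> list[str]:
    """Split text on whitespace into chunks that fit an estimated token budget."""
    max_chars = max_tokens * chars_per_token  # assumed character budget per chunk
    chunks, current, current_len = [], [], 0
    for word in text.split():
        # A single word longer than the budget is cut into budget-sized pieces.
        while len(word) > max_chars:
            if current:
                chunks.append(" ".join(current))
                current, current_len = [], 0
            chunks.append(word[:max_chars])
            word = word[max_chars:]
        extra = len(word) + (1 if current else 0)  # +1 for the joining space
        if current_len + extra > max_chars:
            chunks.append(" ".join(current))
            current, current_len = [word], len(word)
        else:
            current.append(word)
            current_len += extra
    if current:
        chunks.append(" ".join(current))
    return chunks
```

Splitting on whitespace keeps words intact but ignores sentence and paragraph boundaries, so a production version would likely split on those instead to preserve context within each chunk.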

Are there any resources available for developers to learn more about Gemini AI token limits?

Yes, developers can explore official documentation, blog posts, and technical articles provided by Google to gain insights into Gemini AI token limits and best practices for leveraging its capabilities effectively.

How do Gemini AI token limits contribute to the advancement of natural language processing (NLP) technology?

By defining the boundaries of computational processing, Gemini AI token limits drive innovation in NLP technology, facilitating the development of more sophisticated models capable of understanding and generating human-like textual content with unprecedented accuracy and fluency.

Can users expect future updates or expansions to Gemini AI token limits?

Google continuously evaluates and enhances the capabilities of Gemini AI, including token limits, to address emerging challenges and opportunities in the field of AI-driven communication. Users can anticipate ongoing improvements and refinements to optimize performance and expand functionality.

How can businesses leverage Gemini AI token limits to enhance their operations?

Businesses can leverage Gemini AI token limits to streamline text analysis, content generation, and communication processes. By understanding and adhering to these limits, organizations can harness the full potential of Gemini AI to drive innovation, improve efficiency, and deliver superior user experiences.